Here’s a recap of the biggest updates from Google’s Search On 2022 event, including multisearch, Lens, autocomplete, search filters and more.

At its Search On ’22 event today, Google announced a number of new features across Google Search, Google News, Google Shopping, and beyond. Let’s go through some of the features Google discussed.

One note: unlike past Search On events, this one seemed focused on smaller features rather than a major breakthrough in search, such as the earlier announcements of BERT, MUM, and other AI-based advancements in Google Search.

1. Multisearch expanding

Google is expanding multisearch to 70 new languages in the coming months. Google launched multisearch earlier this year for English-language queries in the U.S.

What is Google multisearch. Google multisearch lets you use your phone’s camera to search by image, powered by Google Lens, and then add a text query on top of that image search. Google then uses both the image and the text query to show you visual search results.

How Google multisearch works. Open the Google app on Android or iOS and tap the Google Lens camera icon on the right side of the search box. Then point the camera at something nearby, use a photo from your camera roll, or even capture something on your screen. Next, swipe up on the results to bring them up and tap the “+ Add to your search” button. In this box, you can add text to your photo query.

You can learn more about this feature here.

2. Multisearch near me coming soon

Earlier this year, Google previewed multisearch near me at Google I/O. Google now says it will launch the feature for English-language search results in the U.S. in the coming months; specifically, Google said it will launch in late fall 2022.

What is multisearch near me. The near me aspect extends those image-and-text queries to local results: you can look for products or anything else with your camera and then find it nearby. So if you want to find a restaurant that serves a specific dish, you can do so.

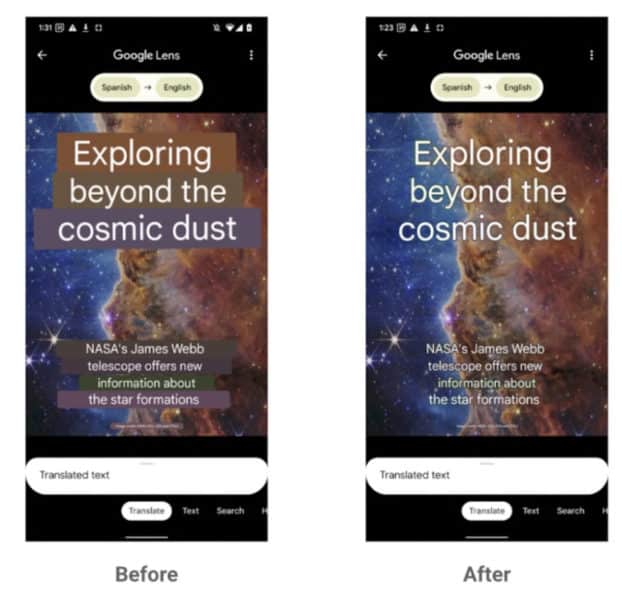

3. Google Lens translated text now cleaner

Google Lens is a lot of fun, and it lets you point your camera at text in almost any setting to translate it. Now, Lens will present that translated text in a cleaner way that blends more naturally into the original image. This is launching later this year.

Google is using generative adversarial networks, also known as GAN models, to present the translated text better. It is the same technology behind the “Magic Eraser” photo feature on Pixel devices.

Here is a sample showing how Google Lens overlays the translation in a way that is easier for searchers to understand:

4. Google iOS App shortcuts

Starting today, the Google Search app for iOS adds shortcuts that make it easier for searchers to search, translate, use voice search, upload screenshots, and more. Here is a screenshot of some of those shortcuts in action:

This is launching today in the U.S. for iOS users and will come to Android later this year.

5. New Search refinements

Google is also rolling out new search refinements that help you as you type in autocomplete and within the search results. Now, as you type your query, Google will present tappable words so you can build your query on the fly. It is a kind of query builder that works simply by tapping words.

Here is a GIF of it in action:

You will also see richer information show up in the autocomplete results as you type.

Google also lets you refine your query after you search by adding or removing topics to zoom in or out. The top search bar now adapts dynamically to help you drill down into what you are looking for.

Here is a screenshot of that top bar with refinements:

<figure><img src="https://searchengineland.com/wp-content/seloads/2022/09/search-refinements-bar-800×337.png" alt="Search refinements bar" /></figure>

<h2>6. More visual information</h2>

<p>For some queries, Google is now showing more visually designed search results. These let you explore more information about topics such as travel, people, animals, and plants.</p>

<p>Depending on the query, Google will show you visual stories, short videos, tips, things to do, and more. Google will also visually highlight the most relevant information in this layout.</p>

<figure><img src="https://searchengineland.com/wp-content/seloads/2022/09/visual3-600×600.gif" alt="More visual search results" /></figure>

<h2>7. Explore as you scroll</h2>

<p>As you scroll through Google Search results, the results often get less relevant the further down you go. That makes sense, since Google should rank the most relevant information at the top.</p>

<p>So Google has added a new explore feature that gives searchers inspiration related to their query, even when it does not exactly match that query.</p>

<p>Searchers can use this new explore feature to learn about topics beyond their original query.</p>

<figure><img src="https://searchengineland.com/wp-content/seloads/2022/09/Search-scroll-to-explore-600×600.png" alt="Scroll to explore in Google Search" /></figure>

<p>This is launching for English and U.S. results in the coming months.</p>

<h2>8. Discussions and forums</h2>

<p>Starting today, Google Search may show a “discussions and forums” section for English-language results in the U.S. It is designed to help people find first-hand experiences on a topic from a range of online discussion forums, including Reddit but not limited to any single forum platform.</p>

<p>Here is what that looks like:</p>

<figure><img src="https://searchengineland.com/wp-content/seloads/2022/09/discussion-forum-521×600.png" alt="Discussions and forums section in Google Search" /></figure>

<h2>9. Translated local and international news</h2>

<p>Early next year, Google will launch a way to find translated news coverage of both local and international stories. Using machine translation, Google Search will show you translated headlines for news results from publishers in other languages.</p>

<p>This will give you “authoritative reporting from journalists” directly from the country affected by that news story.</p>

<p>Here is a screenshot showing “translated by Google” near the headlines:</p>

<figure><img src="https://searchengineland.com/wp-content/seloads/2022/09/translated-by-google-358×600.png" alt="Translated by Google label on news headlines" /></figure>

<h2>10. About this result displays personalization</h2>

<p>Google is also expanding the About this result feature to show whether personalization was taken into account.</p>

Google will now show you whether the search results are personalized in any way. Plus, Google will let you turn off or change that personalization.

So if you tell Google, within the new shopping features, that you prefer a specific department or brand, you can configure that here.

Here is the About this result panel showing that a result has been “personalized for you.”

And here is the personalization feature for the shopping results, which we covered in more detail here.

That covers most of what Google announced at Search On today related to Search; here is our coverage of the Google Shopping announcements.