In the last few days, several people noticed that the Google Toolbar PageRank value for www.twitter.com had plummeted to 0 (it’s now back to its previous 9). Was Google punishing Twitter for how things went down with the feed expiration that temporarily derailed Google’s Realtime search efforts? Nah, Twitter’s website has just been suffering from technical infrastructure issues that are hindering Google’s ability to crawl and index it now that Google is relying on that crawl vs. a direct API feed. This is a great example of how technical infrastructure can have a huge impact on search results, and the issues Twitter is experiencing are common to large sites.

A Google spokesperson told us:

“Recently Twitter has been making various changes to its robots.txt file and HTTP status codes. These changes temporarily resulted in unusual url canonicalization for Twitter by our algorithms. The canonical urls have started to settle down, and we’ve pushed a refresh of the toolbar PageRank data that reflects that. Twitter continues to have high PageRank in Google’s index, and this variation was not a penalty.”

Robots.txt file changes? HTTP status code? Unusual URL canonicalization? Let’s run through some of the issues.

How Technical Infrastructure Issues are Impacting Twitter in Search

First, let’s look at the impact of these crawling issues. The toolbar PageRank issue doesn’t really mean anything; what matters is what’s actually happening in search results. As we all do, I did an ego search to check things out. First, a search for [vanessa fox].

Huh. There’s my profile, but it’s partially indexed. It has no description and the title isn’t coming from the Title tag on the page.

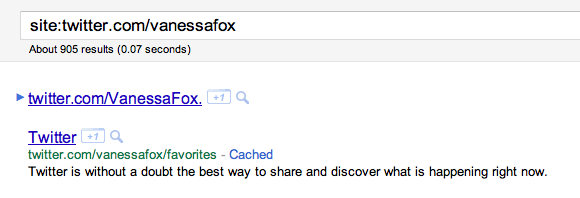

Next, let’s see what happens if I look specifically for that URL.

Well, that’s odd. What’s up with that partially indexed URL with the period at the end and varied capitalization? And what happened to my profile page?

As you can see, something is clearly going wonky with Google’s crawl of the site. What’s going on?

Twitter’s Technical Infrastructure Issues

twitter.com is a really large site. My assumption is that until now, they didn’t have to worry too much about crawling and indexing issues because Google was getting content from their feed. Now that the feed is gone, they are seeing issues that many large sites experience. What follows isn’t a complete rundown, but rather the things I found on a quick skim of the site.

Different robots.txt Files for WWW vs. Non-WWW

The file at twitter.com/robots.txt looks as follows:

#Google Search Engine Robot
User-agent: Googlebot
# Crawl-delay: 10 -- Googlebot ignores crawl-delay ftl
Allow: /*?*_escaped_fragment_
Disallow: /*?
Disallow: /*/with_friends

#Yahoo! Search Engine Robot
User-Agent: Slurp
Crawl-delay: 1
Disallow: /*?
Disallow: /*/with_friends

#Microsoft Search Engine Robot
User-Agent: msnbot
Disallow: /*?
Disallow: /*/with_friends

# Every bot that might possibly read and respect this file.
User-agent: *
Disallow: /*?
Disallow: /*/with_friends
Disallow: /oauth
Disallow: /1/oauth

However, the file at www.twitter.com/robots.txt looks as follows:

User-agent: *
Disallow: /

What does this mean?

- The www and non-www versions of the site are, in some cases, returning different content (which could indicate larger canonicalization problems).

- Twitter seems to be attempting to canonicalize the URLs on the site by blocking the www version of the URLs from search engines.

- Because the www version of the URLs is blocked, search engines can’t follow the 301 redirects that are in place from the www version to the non-www version, so even though that redirect is in place, it’s being ignored.

- PageRank is diluted because some external links are to the www version of a URL and some are to the non-www version of the URL. Since the www version is blocked, the link value is accumulating for each URL separately, but the value to the www version is then thrown away.

This is why you see the www version of my profile showing up for a search for my name, but as partially indexed. That version of the URL likely has more links, so it’s the one that is seen as most valuable by Google’s algorithms, but since it’s blocked by robots.txt, Google can’t crawl it or show a snippet.
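To make the effect of the www robots.txt file concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. It parses the two-line www file quoted above and asks whether Googlebot may fetch the profile URL. The "permissive" file used for contrast is a hypothetical example, not something Twitter served.

```python
from urllib.robotparser import RobotFileParser

# The www.twitter.com/robots.txt content quoted in the article.
WWW_ROBOTS = ["User-agent: *", "Disallow: /"]

# Hypothetical contrast: an empty Disallow blocks nothing.
PERMISSIVE_ROBOTS = ["User-agent: *", "Disallow:"]


def can_googlebot_fetch(robots_lines, url):
    """Parse robots.txt lines and report whether Googlebot may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch("Googlebot", url)


blocked = can_googlebot_fetch(WWW_ROBOTS, "http://www.twitter.com/vanessafox")
open_ = can_googlebot_fetch(PERMISSIVE_ROBOTS, "http://www.twitter.com/vanessafox")
print(blocked, open_)
```

With the file as served, the crawler never requests the www URL at all, so it never sees the 301 that would point it at the non-www version.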

The Site is Using 302 Redirects

A fetch for twitter.com/vanessafox results in a 302 redirect to twitter.com/#!/vanessafox. This means that any links to the first URL aren’t being consolidated to the second. A quick fix in this case would be to change those redirects to 301s.

This issue is compounded by the fact that a fetch for www.twitter.com/vanessafox results in a 301 redirect to twitter.com/vanessafox, which in turn results in that 302 redirect to twitter.com/#!/vanessafox. Of course, because the www version is blocked by robots.txt, no link value is being passed anyway. A fix here would be to remove the robots.txt block so Google could crawl the www version, then 301 redirect directly from www.twitter.com/vanessafox to the canonical (twitter.com/#!/vanessafox) or, slightly less ideally, keep the two hops but change the 302 to a 301.
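The redirect chain described above can be modeled without touching the network. This is a sketch only: the table holds the hops reported in this article, the function names are made up for illustration, and the "consolidates" check simply encodes the reasoning here that only 301s pass link value along.

```python
# Redirects reported in the article, keyed by requested URL.
# Values are (HTTP status, Location target).
OBSERVED_REDIRECTS = {
    "www.twitter.com/vanessafox": (301, "twitter.com/vanessafox"),
    "twitter.com/vanessafox": (302, "twitter.com/#!/vanessafox"),
}

# The suggested fix: one permanent hop straight to the canonical URL.
SUGGESTED_REDIRECTS = {
    "www.twitter.com/vanessafox": (301, "twitter.com/#!/vanessafox"),
    "twitter.com/vanessafox": (301, "twitter.com/#!/vanessafox"),
}


def trace(url, redirects, max_hops=10):
    """Follow the redirect table; return (list of (url, status) hops, final URL)."""
    hops = []
    while url in redirects and len(hops) < max_hops:
        status, target = redirects[url]
        hops.append((url, status))
        url = target
    return hops, url


def consolidates_links(hops):
    """True only if every hop in the chain is a permanent (301) redirect."""
    return all(status == 301 for _, status in hops)


for table in (OBSERVED_REDIRECTS, SUGGESTED_REDIRECTS):
    hops, final = trace("www.twitter.com/vanessafox", table)
    print(hops, "->", final, "| all 301:", consolidates_links(hops))
```

Both tables end at the same URL, but only the second gets there in a single hop with nothing but 301s.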

The Site is Using Google’s Crawlable AJAX Standard, But Is It Using It Correctly?

Twitter URLs are AJAX and use #!, which tells Google to fetch the _escaped_fragment_ version of the URL from the server (which then does some headless browser magic to ensure Google can see the content despite the AJAX). If you want to understand what the heck all that means, you can check out my articles about it or Google’s documentation, but one key issue is how Twitter redirects URLs. As you can see above, they are losing PageRank value by blocking URLs with a lot of links that redirect to the canonical version, but there seem to be other variations of these URLs and it’s not clear that the crawlable AJAX and redirects are working well together.

It looks like what’s happening is:

- For Google only: Google fetches the non-www #! version of the URL (twitter.com/#!/vanessafox), which in turn directs it to the _escaped_fragment_ version (twitter.com/?_escaped_fragment_=/vanessafox).

- For Google only: Google fetches the _escaped_fragment_ version, which then 301 redirects to the non-#! version (twitter.com/vanessafox).

- For Visitors Only: JavaScript on the page changes the URL back to the #! version.

Garblygook? Maybe looking at the HTTP headers will help:

curl -I twitter.com/?_escaped_fragment_=/vanessafox

HTTP/1.1 301 Moved Permanently

Date: Mon, 18 Jul 2011 20:37:38 GMT

Server: hi

Status: 301 Moved Permanently

Location: https://twitter.com/vanessafox
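For reference, the mapping from a #! URL to the _escaped_fragment_ URL used in the curl command can be sketched as follows. This is an illustration under assumptions: the set of bytes to percent-escape is taken from my reading of Google's crawlable AJAX specification, and the function names are invented for this example.

```python
from urllib.parse import urlsplit, urlunsplit

# Bytes assumed (from Google's published scheme) to need percent-escaping
# in the fragment value: '#', '%', '&', '+', plus control characters,
# space, and anything at or above 0x7F (handled in the loop below).
_MUST_ESCAPE = {0x23, 0x25, 0x26, 0x2B}


def _escape_fragment(value):
    """Percent-escape only the bytes listed above; leave '/' and '=' alone."""
    out = []
    for byte in value.encode("utf-8"):
        if byte <= 0x20 or byte >= 0x7F or byte in _MUST_ESCAPE:
            out.append("%{:02X}".format(byte))
        else:
            out.append(chr(byte))
    return "".join(out)


def to_escaped_fragment(url):
    """Map a #! URL to the URL a crawler would request, or None if there is no #!."""
    parts = urlsplit(url)
    if not parts.fragment.startswith("!"):
        return None
    param = "_escaped_fragment_=" + _escape_fragment(parts.fragment[1:])
    query = parts.query + "&" + param if parts.query else param
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


print(to_escaped_fragment("http://twitter.com/#!/vanessafox"))
```

A plain # URL returns None here, which mirrors the point that only #! signals a crawlable AJAX state.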

And here’s the on-page JavaScript code:

<script type="text/javascript">

//<![CDATA[

window.location.replace('/#!/vanessafox');

//]]>

</script>

<script type="text/javascript">

//<![CDATA[

(function(g){var c=g.location.href.split("#!");if(c[1]){g.location.replace(g.HBR = (c[0].replace(/\/*$/, "") + "/" + c[1].replace(/^\/*/, "")));}else return true})(window);

//]]>

</script>

What does this mean? Maybe it’s fine. Twitter is doing this (I guess?) because they want the twitter.com/vanessafox version of the URL to show up in search results. But when someone clicks on that, AJAX on the page takes over and puts the #! back in so the AJAX-y magic can do its thing with the content on the page. It seems overly complicated and since the links are all going to the #! version (since that’s the version visitors see in their browser address bar) and Google can crawl those URLs, why redirect to the twitter.com/vanessafox version at all? As you can see above, that’s introducing the complication of 302 redirects from the URL. The more complication you add, the more chance there is of things going wrong.
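The second of the two scripts quoted above is minified, so here is my reading of its string handling, ported to Python as a rough sketch. The function name is hypothetical and the port covers only the URL rewriting, not the browser navigation. Note that this script goes the opposite way from the first one: it takes a URL containing #! and builds the path-style URL.

```python
def hashbang_to_path(href):
    """Mimic the minified script: split on '#!' and join the two halves with one '/'.

    Returns the rewritten URL, or None when there is nothing after '#!'
    (the case where the script does not navigate).
    """
    parts = href.split("#!")
    if len(parts) > 1 and parts[1]:
        # Strip trailing slashes from the left half and leading slashes
        # from the right half, as the two regex replaces in the script do.
        return parts[0].rstrip("/") + "/" + parts[1].lstrip("/")
    return None


print(hashbang_to_path("http://twitter.com/#!/vanessafox"))
```

So between the two scripts, the site rewrites URLs in both directions depending on which page the visitor lands on, which is part of why the setup feels more complicated than it needs to be.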

A second note about how Twitter is using Google’s crawlable AJAX method is how they are blocking URLs with robots.txt. As you can see above, they are blocking all URLs that include a ? character, but then allowing those URLs to be crawled if they also include the _escaped_fragment_ (meaning, Google has fetched a URL with #! and has subsequently requested the _escaped_fragment_ version). I didn’t see any URLs with ? in them, but if they are blocking duplicate or non-canonical URLs rather than redirecting them or using the rel=canonical attribute, then they could be losing external value much as they are for the www variation of their URLs. Blocking the _escaped_fragment_ version of the URL (rather than the #! version) is the right way to block these pages in robots.txt, but Google did tell me:

“Characters after #! aren’t part of the URL; they’re just a convention to tell Google to use escaped_fragment. So blocking escaped_fragment from being crawled would be enough. But remember too that #! is a signal that a page can be crawled. If you don’t want an AJAX page to be crawled, just use ‘#’ instead of ‘#!’ — and that would be enough to indicate that that AJAX state isn’t meant to be crawled by search engines.”

Certainly it’s the case that # URLs aren’t crawled by default and you need to specifically add this implementation to your server because you want the pages crawled and indexed. However, I can see that some sites might only be able to implement this globally and need to then block a subset.
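To see how the Allow and Disallow lines in the Googlebot section of twitter.com/robots.txt interact, here is a small hand-rolled matcher. It is a sketch, not Google's implementation: it assumes Google's documented behavior that `*` matches any run of characters, that the longest matching rule wins, and that Allow wins a tie. The function names are invented for this example.

```python
import re

# The Googlebot rules from twitter.com/robots.txt, as quoted in the article.
GOOGLEBOT_RULES = [
    ("allow", "/*?*_escaped_fragment_"),
    ("disallow", "/*?"),
    ("disallow", "/*/with_friends"),
]


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern: '*' is a wildcard, trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_allowed(path_and_query, rules=GOOGLEBOT_RULES):
    """Apply the assumed precedence: longest matching pattern wins, Allow wins ties."""
    matches = [
        (len(pattern), kind == "allow")
        for kind, pattern in rules
        if rule_to_regex(pattern).match(path_and_query)
    ]
    if not matches:
        return True  # no rule applies, so the URL is crawlable
    return max(matches)[1]


for candidate in ("/?_escaped_fragment_=/vanessafox", "/search?q=seo", "/vanessafox"):
    print(candidate, is_allowed(candidate))
```

The _escaped_fragment_ URL matches both the Allow rule and `Disallow: /*?`, and the longer Allow pattern takes precedence, which is what lets Google fetch the AJAX content while other ? URLs stay blocked.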

Is Rate Limiting Causing Issues?

You can see rate limiting in the HTTP headers, which Twitter talks about here.

HTTP/1.1 200 OK

Date: Mon, 18 Jul 2011 20:48:44 GMT

Server: hi

Status: 200 OK

X-Transaction: 1311022124-32783-45463

X-RateLimit-Limit: 1000

Does that mean that Google gets blocked after crawling a particular amount? I’m not sure, but it’s another possible problem thrown in the mix.

URL Casing Causing Canonicalization Issues

As you saw earlier, twitter.com/VanessaFox is showing up in search results, as is twitter.com/vanessafox. Both URLs lead to the same place. This is causing yet another set of PageRank dilution, duplication, and canonicalization problems. The best bet here is to normalize the URLs to one variation (the easiest is to pick all lowercase) and then 301 redirect all variations to that. My colleague Todd, who helped trace some of these issues, wrote a great article that describes how to normalize URLs in IIS. Alternatively, Twitter could simply add the rel=canonical attribute to all pages that specifies the canonical version.
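The lowercase normalization suggested above can be sketched in a few lines. This is a minimal illustration with a hypothetical function name; it assumes, as appears to be the case for Twitter profile URLs, that paths are case-insensitive, so lowercasing never points at a different page.

```python
from urllib.parse import urlsplit, urlunsplit


def lowercase_redirect(url):
    """Return (301, canonical_url) if url needs normalizing, else None.

    Only the host and path are lowercased; the query string and fragment
    are left untouched because their values may be case-sensitive.
    """
    parts = urlsplit(url)
    canonical = urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path.lower(), parts.query, parts.fragment)
    )
    if canonical == url:
        return None
    return 301, canonical


print(lowercase_redirect("http://twitter.com/VanessaFox"))
print(lowercase_redirect("http://twitter.com/vanessafox"))
```

A server-side rewrite rule would do the same comparison on each request and issue the 301 only when the requested URL differs from its lowercase form.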

What Does It All Mean?

Yup, Google was right. Twitter is having trouble with robots.txt, HTTP status codes, and URL canonicalization. These are problems lots of large sites face, and they illustrate just how vital crawlable technical infrastructure is to maximum visibility and acquisition from organic search. Toolbar PageRank is the least of their issues.